Recent research shows that achieving high interpretive performance requires annual reading volumes of 4,000 to 10,000 mammograms, whereas in Canada a radiologist needs to read only 1,000 mammograms to remain accredited.

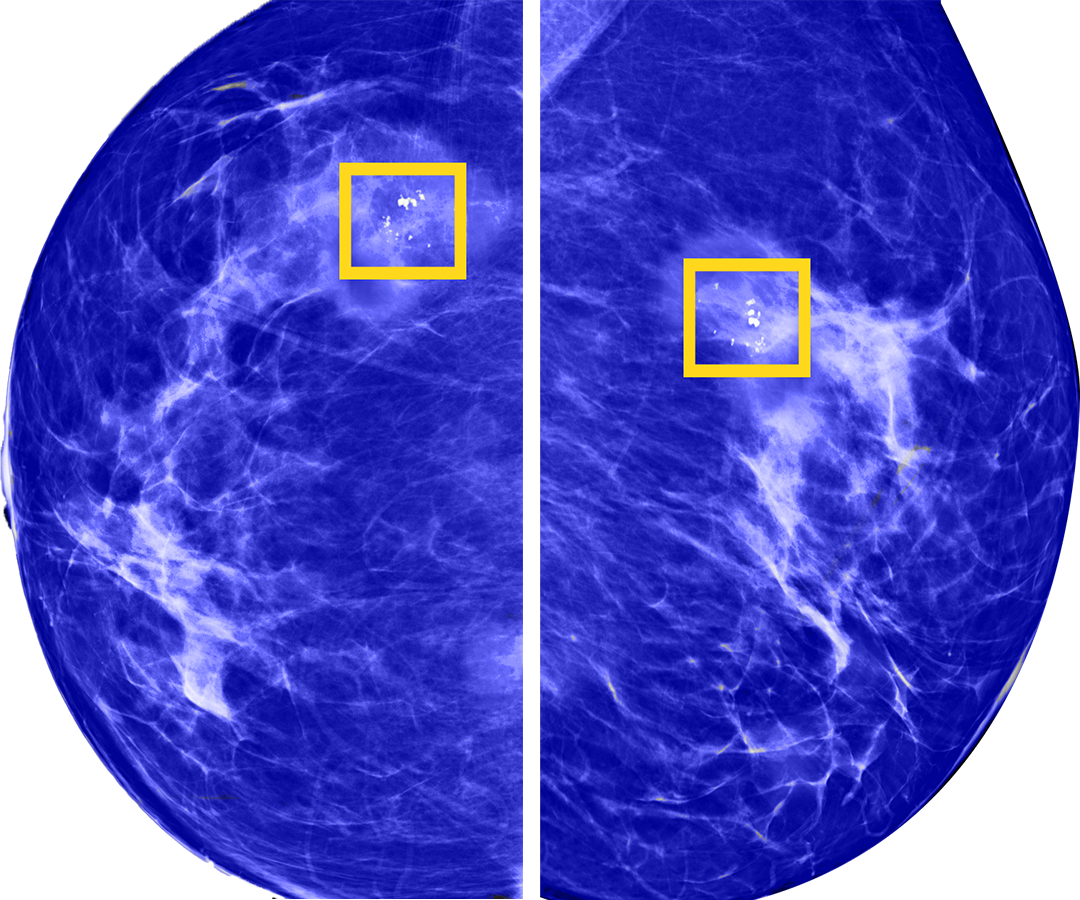

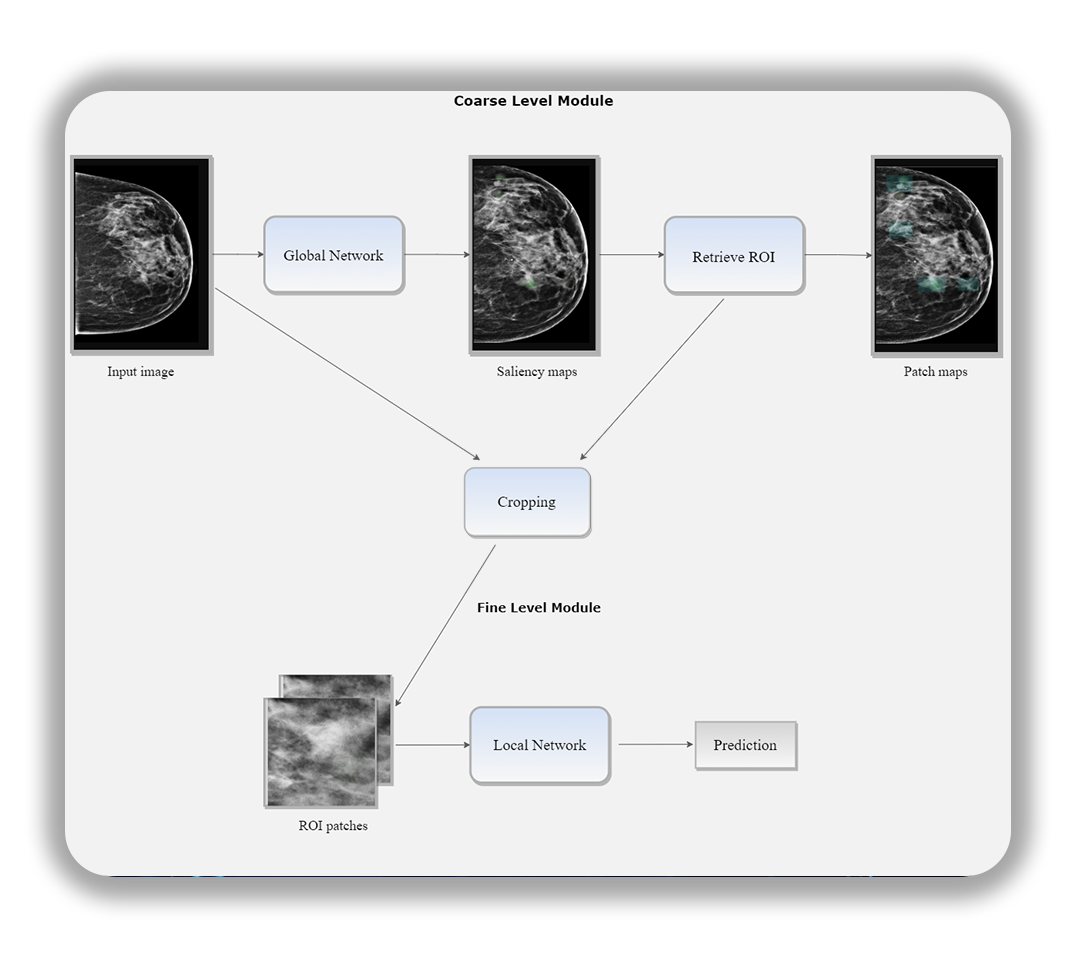

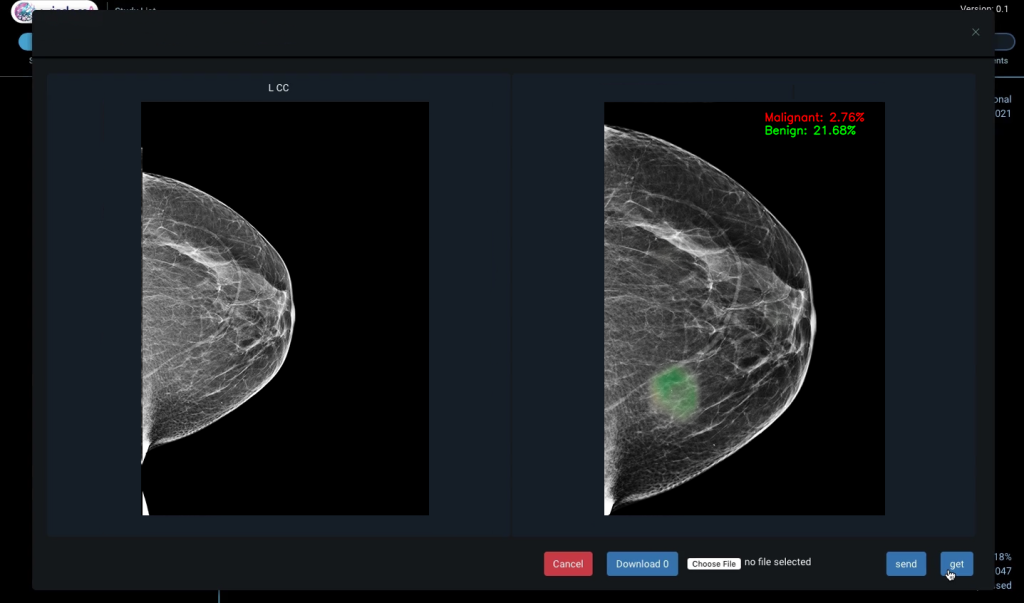

Interpreting mammography images is challenging because the images are very high resolution, while cancerous lesions are small and sparsely scattered across the breast.